الشراكة مع وكالة من أفضل الوكالات

حدد موعدًاللاجتماع عبر النموذج هنا و

سنقوم بتوصيلك مباشرةً بمدير المنتجات لدينا - دون مشاركة مندوبي المبيعات.

هل تفضل التحدث الآن؟

اتصل بنا على + 1 (645) 444 - 1069

"Many are calling this the "GPT-3.5 moment for video," as the leap in quality and control has developers moving from "wow" to "how do I build with this?"

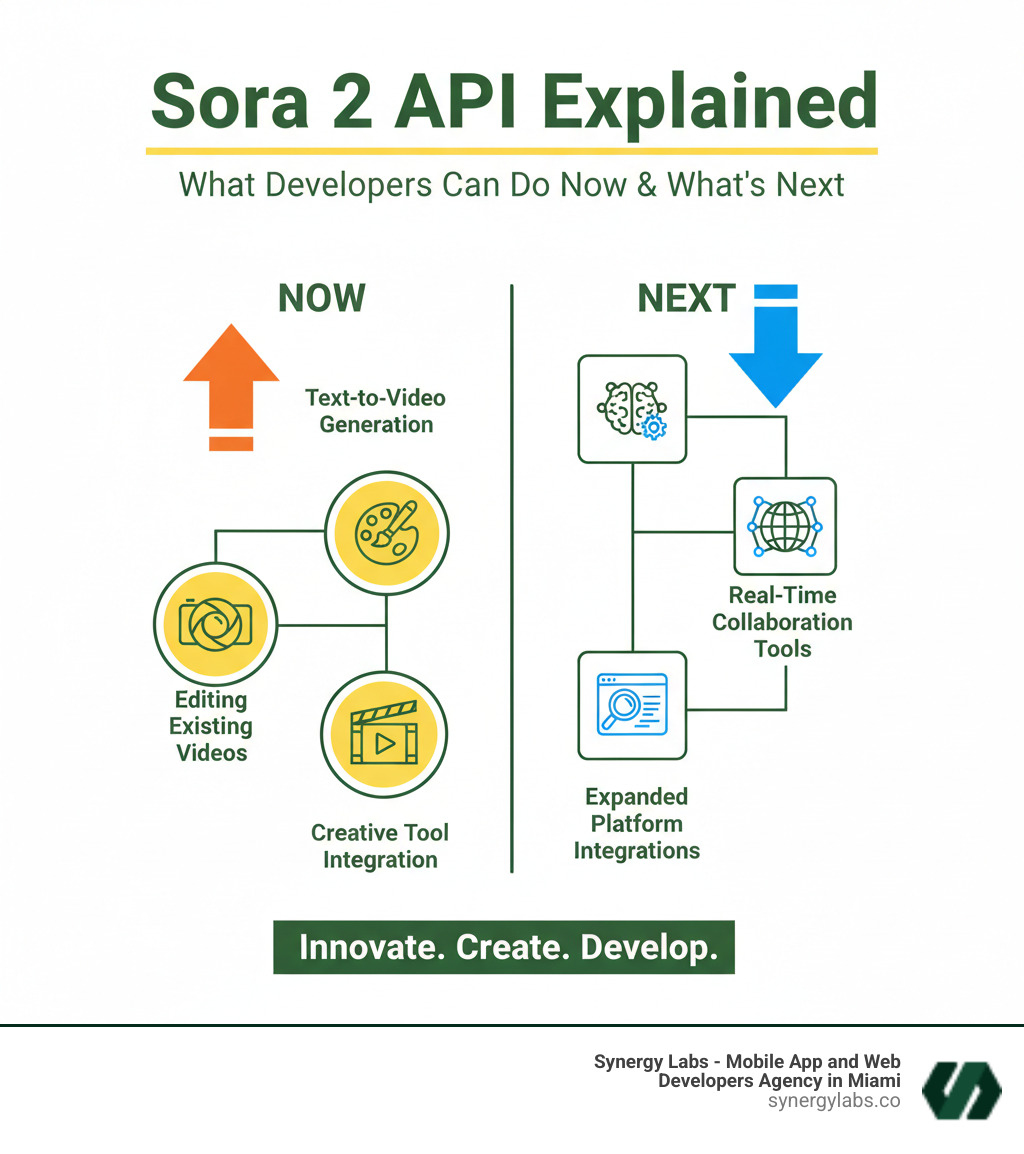

Sora 2 API Explained: What Developers Can Do Now & What's Next is the question on every developer's mind. Many are calling this the "GPT-3.5 moment for video," as the leap in quality and control has developers moving from "wow" to "how do I build with this?"

Here's what developers need to know right now:

Unlike older models that "morphed reality," Sora 2 understands physics, showing a missed basketball shot bouncing realistically off the backboard. It maintains temporal consistency, generates full audio-visual packages, and includes a consent-based "Cameo" feature for inserting a user's likeness.

The main challenge is the lack of a public REST API, with access restricted to testers, researchers, and select creatives. This creates a gap for startups eager to integrate AI video.

At Synergy Labs, we help startups steer these challenges. Understanding the Sora 2 API Explained: What Developers Can Do Now & What's Next landscape is key to planning your roadmap. Let's break down what's real, what's next, and how to prepare for broader access.

Related content about Sora 2 API Explained: What Developers Can Do Now & What’s Next:

Sora 2 is a massive leap toward AI that understands the physical world. OpenAI's vision is to create a "general-purpose simulator of the physical world," and this release gets remarkably close.

The difference is clear in the videos. Advanced world simulation means the model has a deeper understanding of physics and cause and effect. Instead of objects teleporting, Sora 2 shows what happens when a shot misses, with the ball realistically bouncing off the backboard. This detail makes the output more believable.

This physical accuracy extends to entire sequences. Sora 2 maintains physical consistency, temporal coherence, and spatial awareness across frames. Objects don't randomly change size, shadows behave correctly, and water flows naturally. These details are crucial for building believable applications.

What truly sets Sora 2 apart is how it's better at keeping things consistent across multiple shots. A character's appearance and clothing remain consistent, changing AI video from a novelty into a practical tool for narrative storytelling. You can now craft cohesive sequences that tell a story.

Sora 2 gets exciting for developers because it generates the whole audio-visual package. The model creates complex background noises, specific sound effects, and even dialogue that syncs with character lip movements.

For example, prompt for a busy coffee shop, and you'll hear the murmur of conversations, clinking cups, and the hiss of an espresso machine, all synchronized with the visuals. The audio-visual synchronization is tight enough that dialogue matches on-screen action, preventing mismatches that break immersion.

This integrated approach is a massive time-saver. The model handles video and audio simultaneously, understanding how sounds correspond to visual events. This capability, combined with temporal consistency, enables multi-shot narrative generation. You can describe intricate sequences, and Sora 2 will follow your instructions while maintaining character and environmental consistency. This is transformative for short-form storytelling in marketing, education, and pre-visualization.

Sora 2 gives you finer control over prompts. You can use multi-part prompts to specify details like camera movements, shot sequences, and visual styles. Want a slow dolly zoom or a film noir aesthetic? The model understands and delivers.

This precision is invaluable for integrating AI content into professional workflows. You're not just hoping the AI gets it right—you're directing it.

The most talked-about new feature is Cameo, which allows users to insert their face and voice into any generated scene. This opens up fascinating possibilities for personalized marketing, custom training videos, or creative apps.

Crucially, OpenAI has built a solid consent-based system around this feature. Users must verify their identity and maintain end-to-end control of their likeness, with the ability to revoke access at any time. This responsible approach addresses privacy and ethical concerns, preventing misuse for unauthorized deepfakes.

For developers planning with the Sora 2 API Explained: What Developers Can Do Now & What's Next in mind, these features represent a significant evolution in AI video generation.

After the initial excitement for Sora 2, developers are asking one thing: "How do I use this in my app?" It's the classic developer experience: see something amazing, plan to build with it, then hit the wall of limited access.

OpenAI has confirmed that API access is on the roadmap, signaling its intent to make Sora 2 a platform. However, we're still in the limited access phase. A wider API beta is expected around Q3 2025, with a full public release potentially in late 2025 or early 2026. These dates are tentative, but they provide a reasonable planning horizon.

In the meantime, several pathways exist for developers. At Synergy Labs, we've helped clients steer these scenarios where access to cutting-edge tech is gated. The key is understanding today's options and building an architecture that can adapt. For context on the broader tool landscape, see our guide on Top AI Tools to Create an App in 2024: The Ultimate List.

The Sora App and sora.com are the most direct ways to interact with Sora 2, though not via a traditional API. You can download the Sora app for iOS or visit the website to generate videos manually. Access is currently invite-only, rolling out in the U.S. and Canada. The standard model is free with usage limits, making it great for testing. Sora 2 Pro is included with a ChatGPT Pro subscription.

For enterprise developers, Microsoft offers a limited preview of Sora 2 through its Azure AI platform. This is an asynchronous system: you submit a request and poll an endpoint until the video is ready. The Microsoft Learn documentation details the setup, but access is subject to approval.

The most practical option for many developers comes from third-party API providers. They provide programmatic access to Sora 2 now, filling the gap between demand and official availability. These services are ideal for building proofs-of-concept before the official API launches.

Whether using a third-party provider or preparing for the official API, certain technical practices are crucial for a robust integration. Understanding Sora 2 API Explained: What Developers Can Do Now & What's Next means building a reliable system around video generation.

When the official API launches, using OpenAI's SDKs for Python or JavaScript will streamline development, handling authentication and request formatting so you can focus on application logic. At Synergy Labs, we specialize in these integrations. If you're planning to use Sora 2, learn How to Get More Out of Custom AI Integration in 5 Simple Steps.

Before diving in, understand the costs, limitations, and ethical guardrails of Sora 2. For businesses leveraging AI, smart deployment is key to gaining a competitive edge. For a broader perspective, see AI-Driven Growth: Transforming Business Innovation and Competition.

Sora 2's power comes with a pay-per-second model that scales with version and resolution. For experimentation, Sora 2 Standard is free via the Sora app, while Sora 2 Pro is bundled with a ChatGPT Pro subscription.

For API access, OpenAI's pricing is expected to be around $0.40 per video, with enterprise plans starting at $2,000+ monthly. Until then, third-party providers offer alternatives, with pricing typically based on video length or resolution.

To put this in perspective, a 12-second 720p video might cost $1.20, while a high-resolution Pro version could be $6.00. A full minute of high-res Pro video could cost $30. These costs add up, so optimizing your generation strategy is crucial.

Sora 2 is impressive but not perfect. OpenAI's own Sora 2 system card notes that "the physics are a bit off" in some cases. You may see artifacts like flicker, distortion, or objects behaving unnaturally.

Text generation remains a weak spot, often resulting in gibberish. While temporal consistency has improved, maintaining perfect character details across long, complex sequences can still be a challenge.

Beyond technical quirks, there are serious ethical risks. The realism of Sora 2's output makes it a powerful tool for deepfake misuse, including impersonation and disinformation. Bias is another critical concern, as AI models can perpetuate harmful stereotypes, as reports have noted regarding sexist and ableist bias in earlier versions. Finally, intellectual property and regulatory uncertainty create potential legal and compliance headaches.

OpenAI has built multiple safety layers into Sora 2, as outlined in their guide to launching Sora responsibly.

Every output carries visible watermarks and embeds C2PA-style content credentials to identify it as AI-generated. It is critical that developers preserve these provenance signals.

The model also includes robust content filtering to block harmful material, such as sexual content, graphic violence, and unauthorized use of public figures' likenesses.

For features like Cameo, OpenAI has implemented a solid consent-based system. Users verify their identity and maintain full control over their likeness. Developers must also adhere to strict usage policies, and implementing human review for all generated content is an essential best practice for enterprise applications.

At Synergy Labs, we prioritize responsible AI integration. For more on leveraging AI safely, explore our guide on Top GPT Wrapper Use Cases for Business Automation in 2025.

Sora 2 is a practical tool that's already reshaping content creation. We're seeing a shift toward specialized AI tools that do one thing exceptionally well, a trend we call the Micro Stack Revolution: Why Startups Are Replacing Platforms with Single-Purpose AI Tools. Sora 2 embodies this by focusing on generating professional-grade video with synchronized audio.

Sora 2 is making a real-world difference by reducing time, cutting costs, and expanding creative possibilities across industries.

These applications show how Sora 2 API Explained: What Developers Can Do Now & What's Next is about rethinking creative pipelines. This shift is also changing user experience, a topic we explore in AI-Native UX: Why the Next Great Products Won't Look Like Apps.

The roadmap for Sora 2 is ambitious. A wider beta is expected in Q3 2025, with a full public release likely in late 2025 or early 2026.

OpenAI's long-term vision extends beyond video generation to what they call "general-purpose world simulators." The goal is to create AI that understands physical laws and cause-and-effect, laying the groundwork for applications like training robotic agents in simulated environments or testing autonomous vehicle algorithms.

The OpenAI announcement frames this as progress toward professional tools, but the implications are much broader. We're watching the early stages of AI systems that can model reality with increasing fidelity.

For developers, the message is clear: prepare now. Teams experimenting with Sora 2 today will have a significant advantage when broader access arrives. This evolution is a critical chapter in The Future of AI Startups: Disrupting Tech Giants, PMF Challenges, AI-Driven Design. The companies that integrate this tech first will define the next wave of innovation.

At Synergy Labs, we track these developments to bridge the gap between interesting technology and production-ready solutions. When Sora 2's API becomes widely available, the developers who understand the landscape will be ready to build the future.

We know you still have questions. Let's tackle the most common ones developers have about the Sora 2 API Explained: What Developers Can Do Now & What's Next landscape.

This is the top question. Currently, there is no direct public API. Access is limited to the invite-only Sora app and a restricted Azure OpenAI preview for enterprises.

Based on OpenAI's statements, a wider beta testing phase is expected around Q3 2025. A full public release, where any developer can get an API key, is tentatively planned for late 2025 or early 2026. These timelines are subject to change as OpenAI scales its infrastructure and refines safety measures.

For experimentation, Sora 2 Standard is your best bet. It's free with generous usage limits through the official Sora app or sora.com (invite required). This is perfect for testing prompts and creating proofs-of-concept.

If you have a ChatGPT Pro subscription, Sora 2 Pro is included at no extra cost on sora.com, offering higher quality output.

For programmatic API access, third-party providers are the only current option, with pricing typically based on video length. When it arrives, the official OpenAI API is expected to cost around $0.40 per video.

Yes, absolutely. This is a key feature that sets Sora 2 apart. It generates a complete audio-visual package, not just silent clips.

The model can create synchronized dialogue, specific sound effects (like footsteps or a door closing), and complex background noise (like ambient traffic). The audio is designed to match the on-screen action, with dialogue syncing to lip movements and sound effects aligning with visual events.

This integrated audio generation is a massive time-saver for creators, as it can eliminate the need for separate audio editing workflows, making it a powerful tool for narrative content.

Sora 2's arrival marks a transformative chapter in AI-driven content creation. We've explored its capabilities for realistic video, synchronized audio, and consistent narratives. However, the real challenge for businesses isn't just understanding the technology, it's bridging the gap between its potential and practical, real-world implementation.

Navigating the landscape of the Sora 2 API Explained: What Developers Can Do Now & What's Next means dealing with limited access, evolving pricing, and crucial ethical considerations. This requires a strategic partner who understands both the technology and the business goals.

At Synergy Labs, we help companies in Miami, Dubai, New York City, and beyond turn cutting-edge AI into robust software. We provide direct access to senior talent who specialize in AI integration, from prompt optimization to preserving content credentials. The gap between "this is amazing" and "this is working in production" is where many projects stall, and it's where we excel.

Our commitment to user-centered design and robust security ensures your AI-powered applications are intuitive, reliable, and trustworthy.

If you're ready to integrate cutting-edge AI like Sora into your applications and build scalable, innovative solutions, explore our AI infusion services. Let's work together to turn AI potential into your business reality. For more on our expertise, visit our Top AI Developers page.

البدء سهل! ما عليك سوى التواصل معنا من خلال مشاركة فكرتك من خلال نموذج الاتصال الخاص بنا. سيرد أحد أعضاء فريقنا في غضون يوم عمل واحد عبر البريد الإلكتروني أو الهاتف لمناقشة مشروعك بالتفصيل. نحن متحمسون لمساعدتك في تحويل رؤيتك إلى حقيقة!

إن اختيار SynergyLabs يعني الشراكة مع وكالة تطوير تطبيقات جوال من الدرجة الأولى تعطي الأولوية لاحتياجاتك. إن فريقنا الذي يتخذ من الولايات المتحدة مقراً له بالكامل مكرس لتقديم تطبيقات عالية الجودة وقابلة للتطوير ومتعددة المنصات بسرعة وبتكلفة معقولة. نحن نركز على الخدمة الشخصية، مما يضمن لك العمل مباشرةً مع كبار المواهب في جميع مراحل مشروعك. إن التزامنا بالابتكار ورضا العملاء والتواصل الشفاف يميزنا عن الوكالات الأخرى. مع SynergyLabs، يمكنك أن تثق في أن رؤيتك ستُنفذ بخبرة وعناية.

عادةً ما نطلق التطبيقات في غضون 6 إلى 8 أسابيع، اعتماداً على مدى تعقيد مشروعك وميزاته. تضمن لك عملية التطوير المبسّطة التي نتبعها طرح تطبيقك في السوق بسرعة مع الاستمرار في الحصول على منتج عالي الجودة.

تتيح لنا طريقتنا في التطوير عبر المنصات المتعددة إنشاء تطبيقات الويب والهاتف المحمول في نفس الوقت. وهذا يعني أن تطبيقك على الهاتف المحمول سيكون متاحاً على كل من نظامي iOS وAndroid، مما يضمن لك انتشاراً واسعاً وتجربة مستخدم سلسة على جميع الأجهزة. يساعدك نهجنا على توفير الوقت والموارد مع زيادة إمكانات تطبيقك إلى أقصى حد.

في SynergyLabs، نستخدم مجموعة متنوعة من لغات البرمجة وأطر العمل لتناسب احتياجات مشروعك على أفضل وجه. بالنسبة للتطوير عبر المنصات، نستخدم لغة Flutter أو Flutterflow، والتي تتيح لنا دعم الويب وأندرويد وiOS بكفاءة من خلال قاعدة برمجة واحدة - وهي مثالية للمشاريع ذات الميزانيات المحدودة. بالنسبة للتطبيقات المحلية، نستخدم سويفت لتطبيقات iOS وكوتلين لتطبيقات أندرويد.

بالنسبة لتطبيقات الويب، نجمع بين أطر تخطيط الواجهة الأمامية مثل Ant Design أو Material Design مع React. على الواجهة الخلفية، نستخدم عادةً Laravel أو Yii2 للمشاريع المتجانسة، و Node.js للبنى بدون خادم.

بالإضافة إلى ذلك، يمكننا دعم العديد من التقنيات، بما في ذلك Microsoft Azure وGoogle Cloud وFirebase وAmazon Web Services (AWS) وReact Native وDocker وNGINX وApache وغيرها. تُمكِّننا مجموعة المهارات المتنوعة هذه من تقديم حلول قوية وقابلة للتطوير مصممة خصيصاً لتلبية متطلباتك الخاصة.

الأمن أولوية قصوى بالنسبة لنا. نحن ننفذ تدابير أمنية متوافقة مع معايير الصناعة، بما في ذلك تشفير البيانات، وممارسات الترميز الآمنة، وعمليات التدقيق الأمني المنتظمة، لحماية تطبيقك وبيانات المستخدم.

نعم، نحن نقدم الدعم والصيانة والتحديثات المستمرة لتطبيقك. بعد الانتهاء من مشروعك، ستتلقى ما يصل إلى 4 أسابيع من الصيانة المجانية لضمان سير كل شيء بسلاسة. بعد هذه الفترة، نوفر خيارات دعم مستمرة مرنة مصممة خصيصاً لتلبية احتياجاتك، حتى تتمكن من التركيز على تنمية أعمالك بينما نتولى نحن صيانة تطبيقك وتحديثاته.